Applied Deep Learning & Artificial Intelligence Program

Program Duration: 3 months

The Outcome

The Applied Deep Learning and Artificial Intelligence program starts with the basic concepts of Mathematics and Statistics for AI. We then move to Neural Networks and other Deep Learning algorithms such as CNNs, RNNs and Attention Models, with applications of these techniques in Speech and Vision. In the second phase of the program, we cover advanced Deep Learning models such as Reinforcement Learning and Hopfield networks, and their applications in various industries. The last leg covers how to fine-tune your models in a production environment and how to use Deep Learning models for problem solving, while learning the art of critical thinking along the way. You will learn to apply Deep Learning in three application areas: Natural Language Processing, Speech Recognition and Computer Vision.

The Details

The Applied Deep Learning and Artificial Intelligence program runs for 12 weeks and is subdivided into multiple courses.

- Includes 250 hours of in-class instruction and hands-on sessions: 180 hours of in-person classes and 70 hours of webcast classes

- Two 4-day in-person immersive sessions and two 2-day in-person immersive sessions will be held in the program

- In-person classes will be held one day every weekend

- Webcast classes will be held for 4 hours on weekdays

Program Highlights

Applied Deep Learning & Artificial Intelligence Program Curriculum

Application Projects

Phase I

- Computer Vision (auto-colorization of b/w images)

- Video Summarization

- Attention Models and End to End Language Modelling

- Speech Recognition

Phase II

- Medical Image Analysis

- Spam Detector

- Tracking suspicious movement for Security

- Q & A Systems

Phase III

- Conversational Systems

- Stock Broking with Deep Q Learning

- Recommender Systems with Deep Learning

- Detect Command and Control (C&C) Centres using ML and DL models

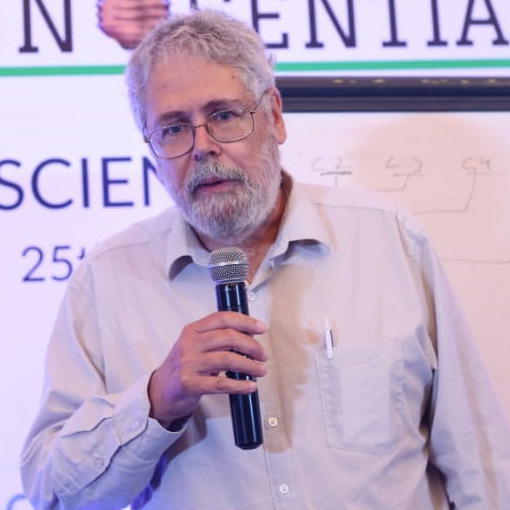

Professor Bhiksha Raj, Fellow IEEE

Language Technologies Institute, School of Computer Science, Carnegie Mellon University

Professor Bhiksha Raj is an expert in the area of Deep Learning and Speech Recognition and has two decades of experience. He was named to the 2017 class of IEEE Fellows for his "contributions to speech recognition," according to IEEE. He is the main instructor of 11-785, Carnegie Mellon University's official Deep Learning course, which is followed by thousands of researchers worldwide.

Dr. Sarabjot Singh Anand

Co-Founder and Chief Data Scientist at Tatras Data

Dr. Sarabjot Singh Anand is a Data Geek. He has been involved in the field of data mining since the early 1990s and has derived immense pleasure from developing algorithms, applying them to real-world problems and training a host of data analysts, both as an academic and as a data analytics consultant.

Dr. Vikas Agrawal

Senior Principal Data Scientist @ Oracle Analytics Cloud

Vikas Agrawal works as a Senior Principal Data Scientist in Cognitive Computing for Oracle Analytics Cloud. His current interests are in automated discovery, adaptive anomaly detection in streaming data, intelligent context-aware systems, and explaining black-box model predictions.

Mr. Mukesh Jain

Analytics, AI, ML & DL Leader (ex-Microsoft, ex-Jio)

Mukesh Jain has been a practitioner and leader in Analytics, AI, ML & DL since 1995.

He is a technologist, techno-biz leader, data scientist, author, coach and teacher.

Professor Joao Gama

University of Porto, Director LIAAD

Joao Gama is an Associate Professor at the Faculty of Economics, University of Porto. He is a researcher and the Director of LIAAD, a group belonging to INESC TEC. He received his PhD from the University of Porto in 2000. He has worked on projects and authored papers in areas related to machine learning, data streams and adaptive learning systems, and is a member of the editorial boards of international journals in his area of expertise.

Professor Ashish Ghosh

Indian Statistical Institute, Kolkata

Ashish Ghosh is a Professor in the Machine Intelligence Unit and the In-charge of the Center for Soft Computing Research at the Indian Statistical Institute, Kolkata.

Professor Jaime Carbonell

Director LTI, Carnegie Mellon University, USA

Jaime is Director and Founder of the Language Technologies Institute and Allen Newell Professor of Computer Science at Carnegie Mellon University. He is a world-renowned expert in the areas of information retrieval, data mining and machine translation. Jaime co-founded and took public Carnegie Group, a company in the IT services market employing advanced artificial-intelligence techniques.

Dr. Derick Jose

Co-founder, Flutura Decision Sciences & Analytics

Derick is the co-founder of Flutura Decision Sciences, a niche AI & IIoT company focussed on impacting outcomes for the Engineering and Energy industries. Flutura has been rated by Bloomberg as one of the fastest growing machine intelligence companies, and its AI platform Cerebra has been certified to work with Halliburton's and Hitachi's platforms.

Mr. Joy Mustafi

Director and Principal Researcher at Salesforce; Visiting Scientist, Innosential

Winner of the Zinnov Award 2017 - Technical Role Model - Emerging Technologies (Senior Level). He has collaborated with the ecosystem by visiting around twenty-five leading universities in India as visiting faculty, guest speaker, advisor, mentor, project supervisor, panelist, academic board member, curricula moderator, paper setter and evaluator, and judge of events such as hackathons. He holds more than twenty-five patents and has fifteen publications on artificial intelligence in recent years.

Dr. Vijay Gabale

Co-founder and CTO, Infilect

Deep learning enabled computer vision forms the core competence of Infilect products. Prior to cofounding Infilect, Vijay was a research scientist with IBM research. Vijay obtained his Ph.D. in Computer Science from IIT Bombay in 2012. Vijay has extensively worked on intelligent networks and systems by applying machine learning and deep learning techniques. Vijay has published research papers in top-tier conferences such as SIGCOMM, KDD and has several patents to his name.

Mr. Dipanjan Sarkar

Intel AI

Dipanjan (DJ) holds a master of technology degree with specializations in Data Science and Software Engineering. He is also an avid supporter of self-learning and massive open online courses. He plans to venture soon into the world of open-source products to improve the productivity of developers across the world.

Mr. Ajit Jaokar

Director of the Data Science Program, University of Oxford

Ajit Jaokar's work is based on identifying and researching cross-domain technology trends in Telecoms, Mobile and the Internet.

Ajit conducts a course at Oxford University on Big Data and Telecoms and also teaches at City Sciences (Technical University of Madrid) on Big Data Algorithms for Future Cities / Internet of Things.

Dr. Pratibha Moogi

ex-Samsung R&D

Dr. Pratibha Moogi holds a PhD from OGI School of Engineering, OHSU, Portland and a Master's from IIT Kanpur. She has worked at the SRI International lab and in many R&D groups, including Texas Instruments, Nokia and Samsung. She currently serves as a Director in the Data Science Group (DSG) at [24]7.ai, a leading B2B customer operation and journey analytics company.

Schedule & Price

Launch date: 27th Jan, 2019

Foundation of learning start date: 15th Mar, 2019

In person session start date: 25th Mar, 2019

Tuition Fee: Rs 1,10,000* (taxes extra). Monthly EMI starting at 5,791* INR only.

- Includes 250 hours of in-class instruction and hands-on sessions: 180 hours of in-person classes and 70 hours of webcast classes.

- 7 Full Courses

- 10 Projects

- 120 Lectures

- 120 Lab Hours

Price

INR 1,10,000*

- 18+ Professional Speakers

- Networking Sessions

- Meet The Visiting Faculty

- Pitch To The VCs

Preparatory Course: Foundations of Learning AI

1. Mathematical Foundations of Data Science:

A. Linear Algebra:

- Vectors, Matrices

- Tensors

- Matrix Operations

- Projections

- Eigenvalue decomposition of a matrix

- LU Decomposition

- QR Decomposition/Factorization

- Symmetric Matrices

- Orthogonalization & Orthonormalization

- Real and Complex Analysis (Sets and Sequences, Topology, Metric Spaces, Single-Valued and Continuous Functions, Limits, Cauchy Kernel, Fourier Transforms)

- Information Theory (Entropy, Information Gain)

- Function Spaces and Manifolds

- Relational Algebra and SQL
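To give a flavour of the orthogonalization topic listed above, here is a minimal sketch of classical Gram-Schmidt orthonormalization in plain Python. The function names `dot` and `gram_schmidt`, the tolerance and the sample vectors are illustrative assumptions, not course material.

```python
import math

def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(vectors, tol=1e-12):
    """Return an orthonormal basis for the span of `vectors` (classical Gram-Schmidt)."""
    basis = []
    for v in vectors:
        # Subtract the projection of v onto each basis vector found so far.
        w = list(v)
        for q in basis:
            coeff = dot(v, q)
            w = [wi - coeff * qi for wi, qi in zip(w, q)]
        norm = math.sqrt(dot(w, w))
        if norm > tol:  # skip vectors that are numerically linearly dependent
            basis.append([wi / norm for wi in w])
    return basis

# Illustrative input: the third vector is the sum of the first two,
# so only two orthonormal basis vectors are produced.
basis = gram_schmidt([[1.0, 1.0, 0.0], [1.0, 0.0, 1.0], [2.0, 1.0, 1.0]])
```

In practice a library routine such as a QR factorization would normally be preferred for numerical stability; the sketch only shows the idea.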

B. Multivariate Calculus

- Differential and Integral Calculus

- Partial Derivatives

- Vector-Valued Functions

- Directional Gradient

- Hessian

- Jacobian

- Laplacian and Lagrangian Distribution

2. Probability for Data Scientists

- Probability Theory and Statistics

- Combinatorics

- Random Variables

- Probability Rules & Axioms

- Bayes' Theorem

- Variance and Expectation

- Conditional and Joint Distributions

- Standard Distributions (Bernoulli, Binomial, Multinomial, Uniform and Gaussian)

- Moment Generating Functions

- Maximum Likelihood Estimation (MLE)

- Prior and Posterior

- Maximum a Posteriori Estimation (MAP) and Sampling Methods

- Descriptive Statistics

- Hypothesis Testing

- Goodness of Fit

- Analysis of Variance

- Correlation

- Chi-squared (χ²) test

- Design of Experiments
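As a small worked example of Bayes' theorem from the list above, the sketch below computes a posterior probability for a binary hypothesis. The function name `posterior` and all the numbers are hypothetical, chosen only to echo the spam-detector project.

```python
def posterior(prior, likelihood, likelihood_given_not):
    """P(H | E) via Bayes' theorem for a binary hypothesis H.

    prior                -- P(H)
    likelihood           -- P(E | H)
    likelihood_given_not -- P(E | not H)
    """
    # Total probability of the evidence E.
    evidence = likelihood * prior + likelihood_given_not * (1.0 - prior)
    return likelihood * prior / evidence

# Hypothetical numbers: 20% of mail is spam; a given word appears in
# 60% of spam messages and in 5% of non-spam messages.
p_spam_given_word = posterior(prior=0.2, likelihood=0.6, likelihood_given_not=0.05)
```

With these assumed numbers the posterior works out to 0.12 / (0.12 + 0.04), roughly 0.75, which is much higher than the 20% prior.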

3. Algorithms and Data Structures:

A. Graph Theory: Basic Concepts and Algorithms

B. Algorithmic Complexity

- Algorithm Analysis

- Greedy Algorithms

- Divide and Conquer and Dynamic Programming

C. Data Structures

- Array, List, Hashing, Binary Trees, Heap, Stack, etc.

- Dynamic Programming

- Randomized & Sublinear Algorithms

- Graphs

4. R and Python

A. R Programming Language

- Vectors

- Matrices

- Lists

- Data frame

- Basic Syntax

- Basic Statistics

- Data Manipulation (dplyr)

- Visualization (ggplot2)

- Connecting to databases (RJDBC)

B. Python Programming Language

- Python language fundamentals

- Data Structures

- Beautiful Soup

- Regular Expressions

- JSON

- RESTful Web Services (Flask)

- NumPy

- Plots in matplotlib, seaborn

- Pandas

Course I: Introduction to AI & Nature of Intelligence

The course offers fundamentals that you cannot find in any other course or book. These fundamentals will be invaluable for your future work on ML and AI.

- This course explores the nature of intelligence, ranging from machines to the biological brain. The material is useful when undertaking ambitious projects in machine learning and AI, and will help you avoid pitfalls in those projects.

- What are the differences between the real brain and machine intelligence, and how can you use this knowledge to prevent failures in your work? What are the limits of today's AI technology? How can you assess early in your AI project whether it has a chance of success?

- What are the most fundamental mathematical theorems in machine learning, and how are they relevant to your everyday work?

Course II: Introduction to Deep Learning

UNIT 1:

- Introduction to deep learning

- Course logistics

- History and cognitive basis of neural computation

- The perceptron / multi-layer perceptron

UNIT 2:

- The neural net as a universal approximator

UNIT 3A:

- Training a neural network

- Perceptron learning rule

- Empirical Risk Minimization

- Optimization by gradient descent
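To illustrate the perceptron learning rule named in this unit, here is a minimal sketch in plain Python that learns the logical AND function. The function names, the learning rate and the toy dataset are assumptions made for illustration, not the course's own code.

```python
def predict(w, b, x):
    """Threshold unit: output 1 if the weighted sum is positive, else 0."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0

def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule for labels in {0, 1}."""
    w = [0.0] * len(samples[0][0])
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in samples:
            err = y - predict(w, b, x)
            if err != 0:
                # Move the decision boundary towards the misclassified point.
                w = [wi + lr * err * xi for wi, xi in zip(w, x)]
                b += lr * err
                errors += 1
        if errors == 0:  # every sample classified correctly: stop early
            break
    return w, b

# Toy dataset (linearly separable): logical AND.
and_data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(and_data)
predictions = [predict(w, b, x) for x, _ in and_data]
```

Because AND is linearly separable, the rule is guaranteed to converge; on data that is not separable (such as XOR) a single perceptron would not, which is one motivation for the multi-layer networks covered later.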

UNIT 3B:

- Single hidden layered networks and universal approximation

- Motivation for more than 1 hidden layer

- Feature engineering versus co-learning features and estimation

UNIT 4:

- Back propagation

- Calculus of back propagation
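The calculus of back propagation comes down to applying the chain rule through each layer. As a rough sketch under simplifying assumptions (a single sigmoid neuron with squared-error loss; all names and values are illustrative), the analytic gradient can be checked against a finite-difference estimate:

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, b, x, y):
    """Squared-error loss of a single sigmoid neuron on one example."""
    return 0.5 * (sigmoid(w * x + b) - y) ** 2

def gradients(w, b, x, y):
    """Analytic gradients dL/dw and dL/db obtained with the chain rule."""
    a = sigmoid(w * x + b)
    delta = (a - y) * a * (1.0 - a)  # dL/da * da/dz
    return delta * x, delta          # dz/dw = x, dz/db = 1

# Illustrative values for the parameters and the training example.
w, b, x, y = 0.5, -0.2, 1.5, 1.0
dw, db = gradients(w, b, x, y)

# Central finite differences as an independent check of the calculus.
eps = 1e-6
dw_numeric = (loss(w + eps, b, x, y) - loss(w - eps, b, x, y)) / (2 * eps)
db_numeric = (loss(w, b + eps, x, y) - loss(w, b - eps, x, y)) / (2 * eps)
```

The same gradient check is a common debugging aid when implementing back propagation for larger networks.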

UNIT 5:

- Convergence in neural networks

- Rates of convergence

- Loss surfaces, Learning rates, and optimization methods

- RMSProp, Adagrad, Momentum
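As one example of the optimization methods listed in this unit, the sketch below applies gradient descent with momentum to a one-dimensional quadratic. The function name, the hyperparameters and the objective f(x) = (x - 3)^2 are assumptions chosen for illustration.

```python
def minimize_with_momentum(grad, x0, lr=0.1, mu=0.9, steps=300):
    """Gradient descent with (heavy-ball) momentum on a scalar parameter."""
    x, velocity = x0, 0.0
    for _ in range(steps):
        # The velocity accumulates an exponentially decaying sum of past gradients.
        velocity = mu * velocity - lr * grad(x)
        x += velocity
    return x

# Illustrative objective: f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
x_min = minimize_with_momentum(lambda x: 2.0 * (x - 3.0), x0=0.0)
```

Setting `mu=0.0` recovers plain gradient descent, which makes it easy to compare the two update rules on the same problem.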

UNIT 6:

- Stochastic gradient descent

- Acceleration

- Overfitting and regularization

- Tricks of the trade: choosing a divergence (loss) function, batch normalization, dropout

UNIT 7:

- Recap of training and Q&A session for students

UNIT 8:

- Optimization continued

Course III: Core Deep Learning

UNIT 9 A:

- Models of vision

- Neocognitron

- Mathematical details of CNNs, AlexNet, Inception, VGG

UNIT 9 B:

- Architecture

- Convolution, Pooling, Normalization

- Training strategies

- Visualizing and understanding convolutional networks

- Some more well-known convolutional networks (LeNet, ZFNet, GoogLeNet)

UNIT 10:

- Recurrent Neural Networks (RNNs)

- Modeling series

- Back propagation through time

- Bidirectional RNNs

UNIT 11:

- Stability

- Exploding/vanishing gradients

- Long Short-Term Memory Units (LSTMs) and variants

- ResNets

UNIT 12:

- Loss functions for recurrent networks

- Sequence Prediction

UNIT 13:

- Sequence To Sequence Methods

- Connectionist Temporal Classification (CTC)

UNIT 14:

- Sequence-to-sequence models

- Attention models, examples from speech and language

UNIT 15:

- What do networks represent

- Autoencoders and dimensionality reduction

- Learning representations

UNIT 16:

- Variational Autoencoders (VAEs)

UNIT 17:

- Generative Adversarial Networks (GANs) Part 1

UNIT 18:

- Generative Adversarial Networks (GANs) Part 2

Course IV: Advanced Deep Learning Models

UNIT 19:

- Regularization

UNIT 20:

- Transfer Learning

UNIT 21:

- Hopfield Networks

- Boltzmann Machines

UNIT 22:

- Training Hopfield Networks

- Stochastic Hopfield Networks

UNIT 23:

- Restricted Boltzmann Machines

- Deep Boltzmann Machines

UNIT 24:

- Reinforcement Learning 1

UNIT 25:

- Reinforcement Learning 2

UNIT 26:

- Reinforcement Learning 3

UNIT 27:

- Reinforcement Learning 4

UNIT 28:

- Q-Learning, Deep Q-Learning

- Case Study: Computer Vision (auto-colorization of b/w images; attention)

- Case Study: Natural Language Processing (caption generation, Word2Vec)

Course V: Artificial Intelligence & its Applications

- Image Processing:

- Projects: Medical image analysis -- pathology, tracking suspicious movement for security.

- Natural Language Processing:

- Projects: Spam detector, Q&A/Conversational systems.

- Speech:

- Project: Conversational systems.

- Deep Learning in Cyber Security

Our Address:

2/3, 2nd Floor, 80 Feet Road, Barleyz Junction, Sony World Crossing, Above KFC, Koramangala, Venkappa Garden, Ejipura, Bengaluru, Karnataka 560034