Advanced Machine Learning Program

Program Duration: 6 months

The Outcome

Upon graduating, you will be comfortable designing and implementing models in Scalable Machine Learning and Advanced Bayesian Modeling, and you will know the fundamentals of advanced machine learning and their applications in Recommender Systems, Social Network Analysis, Text Mining, Natural Language Processing, and Data Streams. You will also apply massive data mining principles in an end-to-end IoT project.

The Details

The Advanced Machine Learning Program runs for 24 weeks and is subdivided into multiple courses.

- Includes 500 hours of in-class instruction and hands-on sessions: 360 hours of in-person classes and 140 hours of webcast classes with a TA

- Four 4-day in-person immersive sessions and four 2-day in-person immersive sessions will be held during the program

- In-person classes will be held one day every weekend

- Webcast classes will be held for 4 hours on weekdays

Applied Machine Learning Program Curriculum

Application Projects

Phase I

- Network Intrusion detection

- Predictive Text Generation

- Churn Prediction

- Weather Forecasting

Phase II

- Customer Lifetime Modelling

- Speech synthesis

- Named Entity Extraction

- Diagnosis

Phase III

- Driving for Fuel Efficiency

- Car navigation

- Viral Marketing

Advanced Machine Learning Program Curriculum

Application Projects

Phase I

- IoT Data Processing

- Spam Detector

- Dialogue Systems

- News Recommendation

- Q & A Systems

Phase II

- Sentiment Analysis

- Machine translation

- Text Summarization

- Natural Language Processing (caption generation, Word2Vec)

- Building an Intelligent Recommender System

Phase III

- Conversational Recommender System

- Building an Intelligent Information System

- Mining Massive Multimedia Data Sets

- Analyzing Social Networks at Scale

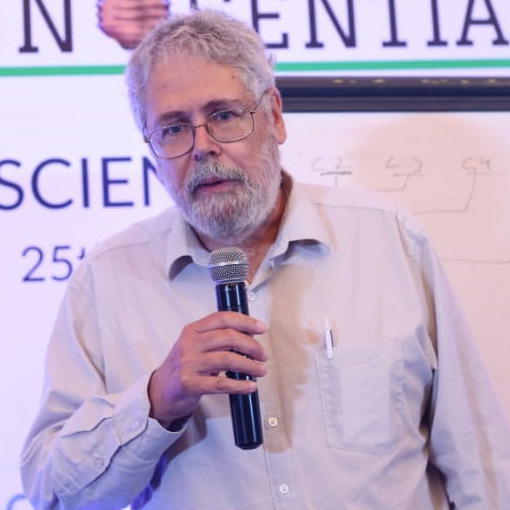

Professor Bhiksha Raj, Fellow IEEE

Language Technologies Institute, School of Computer Science, Carnegie Mellon University

Professor Bhiksha Raj is an expert in Deep Learning and Speech Recognition with two decades of experience. He was named to the 2017 class of IEEE Fellows for his "contributions to speech recognition," according to IEEE. He is the main instructor of 11-785, Carnegie Mellon University’s official Deep Learning course, which is followed by thousands of researchers worldwide.

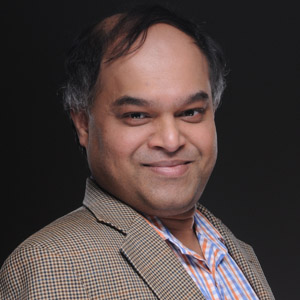

Dr. Sarabjot Singh Anand

Co-Founder and Chief Data Scientist at Tatras Data

Dr. Sarabjot Singh Anand is a Data Geek. He has been involved in the field of data mining since the early 1990s and has derived immense pleasure from developing algorithms, applying them to real-world problems and training a host of data analysts in his capacity as an academic and data analytics consultant.

Dr. Vikas Agrawal

Senior Principal Data Scientist @ Oracle Analytics Cloud

Vikas Agrawal works as a Senior Principal Data Scientist in Cognitive Computing for Oracle Analytics Cloud. His current interests are in automated discovery, adaptive anomaly detection in streaming data, intelligent context-aware systems, and explaining black-box model predictions.

Mr. Mukesh Jain

Analytics, AI, ML & DL Leader (ex-Microsoft, ex-Jio)

Mukesh Jain has been a practitioner and leader in Analytics, AI, ML & DL since 1995.

He is a technologist, techno-biz leader, data scientist, author, coach and teacher.

Professor Joao Gama

University of Porto, Director LIAAD

Joao Gama is an Associate Professor at the Faculty of Economics, University of Porto. He is a researcher and the Director of LIAAD, a group belonging to INESC TEC. He received his PhD from the University of Porto in 2000. He has worked on projects and authored papers in areas related to machine learning, data streams, and adaptive learning systems, and is a member of the editorial board of international journals in his area of expertise.

Professor Ashish Ghosh

Indian Statistical Institute, Kolkata

Ashish Ghosh is a Professor in the Machine Intelligence Unit and the In-charge of the Center for Soft Computing Research at the Indian Statistical Institute, Calcutta.

Professor Jaime Carbonell

Director LTI, Carnegie Mellon University, USA

Jaime is Director and Founder of the Language Technologies Institute and Allen Newell Professor of Computer Science at Carnegie Mellon University. He is a world-renowned expert in the areas of information retrieval, data mining and machine translation. Jaime co-founded and took public Carnegie Group, a company in the IT services market employing advanced artificial-intelligence techniques.

Dr. Derick Jose

Co-founder, Flutura Decision Sciences & Analytics

Derick is the co-founder of Flutura Decision Sciences, a niche AI & IIoT company focused on impacting outcomes for the Engineering and Energy industries. Flutura has been rated by Bloomberg as one of the fastest growing machine intelligence companies, and its AI platform Cerebra has been certified to work with Halliburton and Hitachi platforms.

Mr. Joy Mustafi, Director and Principal Researcher at Salesforce

Visiting Scientist, Innosential

Joy is the winner of the Zinnov Award 2017 - Technical Role Model - Emerging Technologies (Senior Level). He has collaborated with the ecosystem by visiting around twenty-five leading universities in India as visiting faculty, guest speaker, advisor, mentor, project supervisor, panelist, academic board member, curricula moderator, paper setter and evaluator, and judge of events such as hackathons. He holds more than twenty-five patents and has fifteen publications on artificial intelligence from recent years.

Dr. Vijay Gabale

Co-founder and CTO, Infilect

Deep-learning-enabled computer vision forms the core competence of Infilect products. Prior to co-founding Infilect, Vijay was a research scientist with IBM Research. Vijay obtained his Ph.D. in Computer Science from IIT Bombay in 2012. He has worked extensively on intelligent networks and systems, applying machine learning and deep learning techniques. He has published research papers in top-tier conferences such as SIGCOMM and KDD and has several patents to his name.

Mr. Dipanjan Sarkar

Intel AI

Dipanjan (DJ) holds a master of technology degree with specializations in Data Science and Software Engineering. He is also an avid supporter of self-learning and massive open online courses. He plans to venture soon into the world of open-source products to improve the productivity of developers across the world.

Mr. Ajit Jaokar

Director of the Data Science Program, University of Oxford

Ajit Jaokar's work is based on identifying and researching cross-domain technology trends in Telecoms, Mobile and the Internet.

Ajit conducts a course at Oxford University on Big Data and Telecoms and also teaches at City Sciences (Technical University of Madrid) on Big Data Algorithms for Future Cities / Internet of Things.

Dr. Pratibha Moogi

ex-Samsung R&D

Dr. Pratibha Moogi holds a PhD from OGI, School of Engineering, OHSU, Portland and a Master's from IIT Kanpur. She has worked at SRI International and in many R&D groups, including Texas Instruments, Nokia, and Samsung. She currently serves as a Director in the Data Science Group (DSG) at [24]7.ai, a leading B2B customer operation and journey analytics company.

Applied Machine Learning Program

Preparatory Course: Foundations of Learning AI

1. Mathematical Foundations of Data Science:

A. Linear Algebra:

- Vectors, Matrices

- Tensors

- Matrix Operations

- Projections

- Eigenvalue decomposition of a matrix

- LU Decomposition

- QR Decomposition/Factorization

- Symmetric Matrices

- Orthogonalization & Orthonormalization

- Real and Complex Analysis (Sets and Sequences, Topology, Metric Spaces, Single-Valued and Continuous Functions, Limits, Cauchy Kernel, Fourier Transforms)

- Information Theory (Entropy, Information Gain)

- Function Spaces and Manifolds

- Relational Algebra and SQL

B. Multivariate Calculus

- Differential and Integral Calculus

- Partial Derivatives

- Vector-Valued Functions

- Directional Gradient

- Hessian

- Jacobian

- Laplacian and Lagrangian Distribution

2. Probability for Data Scientists

- Probability Theory and Statistics

- Combinatorics

- Random Variables

- Probability Rules & Axioms

- Bayes' Theorem

- Variance and Expectation

- Conditional and Joint Distributions

- Standard Distributions (Bernoulli, Binomial, Multinomial, Uniform and Gaussian)

- Moment Generating Functions

- Maximum Likelihood Estimation (MLE)

- Prior and Posterior

- Maximum a Posteriori Estimation (MAP) and Sampling Methods

- Descriptive Statistics

- Hypothesis Testing

- Goodness of Fit

- Analysis of Variance

- Correlation

- Chi-squared test

- Design of Experiments

3. Algorithms and Data Structures:

A. Graph Theory: Basic Concepts and Algorithms

B. Algorithmic Complexity

- Algorithm Analysis

- Greedy Algorithms

- Divide and Conquer and Dynamic Programming

C. Data Structures

- Array, List, Hashing, Binary Trees, Heap, Stack, etc.

- Dynamic Programming

- Randomized & Sublinear Algorithms

- Graphs

4. R and Python

A. R Programming Language

- Vectors

- Matrices

- Lists

- Data frames

- Basic Syntax

- Basic Statistics

- Data Manipulation (dplyr)

- Visualization (ggplot2)

- Connecting to databases (RJDBC)

B. Python Programming Language

- Python language fundamentals

- Data Structures

- Beautiful Soup

- Regular Expressions

- JSON

- RESTful Web Services (Flask)

- NumPy

- Plots in matplotlib, seaborn

- Pandas

5. Numerical and Combinatorial Optimization

Conjugate Gradient Methods, Quasi-Newton Methods, Constrained Optimization, Linear Programming, Nonlinear Constrained Optimization, Quadratic Programming, Integer Programming, Knapsack Problem, Travelling Salesman, Vehicle Routing, Job Shop Scheduling, Gradient and Stochastic Gradient Descent, and Primal-Dual Methods.

Course I: Introduction to AI & Nature of Intelligence

The course offers fundamentals that are rarely covered in other courses or books. These fundamentals will be invaluable for your future work on ML and AI.

- This course explores the nature of intelligence, ranging from machines to the biological brain. Information provided in the course is useful when undertaking ambitious projects in machine learning and AI. It will help you avoid pitfalls in those projects.

- What are the differences between the real brain and machine intelligence, and how can you use this knowledge to prevent failures in your work? What are the limits of today's AI technology? How can you assess early in your AI project whether it has a chance of success?

- What are the most fundamental mathematical theorems in machine learning, and how are they relevant to your everyday work?

Course II: Exploratory Data Analysis and Feature Engineering

1. Exploratory Data Analysis

- Basic Plotting of Data

- Outlier Detection

- Dimensionality Reduction: Principal Component Analysis, Multidimensional Scaling

- Data Transformation

- Dealing with Missing Values

2. Feature Engineering

- Feature extraction and feature engineering

- Feature transformation

- Feature selection

- Grid search

- Automated feature creation

- Aggregations and transformations

- Introduction to Featuretools

- Introduction to Entities and EntitySets, Table Relationships, Feature Primitives, Deep Feature Synthesis

Course III: Introduction to Machine Learning

1. Learning from Data:

- The appeal of learning from examples, Motivational Case Studies, A formal definition of learning, Key Components of Learning, Population vs. Sample, Decision Boundary, Types of data, Typical Issues with Data, Types of Learning

- Learning as search: Instance and Hypothesis Space, Introductions to Search Algorithms, Cost Functions

- Version Spaces/Perceptron/Linear Regression /Nearest Neighbor

- Overfitting/Regularization, Worst-case performance: VC dimension, Bias-Variance Tradeoff, Nonlinear Embedding, Outlier Detection, Minimum description length

- Estimating Accuracy: Train/test split, Cross Validation, Bootstrap Hypothesis testing, Confusion Matrix, Sensitivity and Specificity, Precision and Recall, ROC curves and AUC, MAPE, Kappa Statistic, AIC, BIC

- Data Science Process

2. Introduction to Bayesian Learning:

- Probability review

- Bayes rule

- Conjugate priors

- Bayesian Inference I (coin flipping)

- Bayesian Inference II (hypothesis testing and summarizing distributions)

- Bayesian Inference III (decision theory)

- Bayesian linear regression

- Bayes classifiers

- Statistical Estimation: Maximum Likelihood Estimation, Bayes error rate, Curse of dimensionality

- Laplace approximation, Naïve Bayes, Introduction to Bayesian Belief Networks, Introduction to Sampling, Gibbs sampling, Logistic regression

Course IV: Supervised Machine Learning

- Classification Algorithms: Decision Trees, Rule Induction, SVM

- Dealing with Skewed Class Distribution and Cost-based Classification: Resampling

- Regression: Lasso and Ridge Regression, MARS, OLS, PLS, GLM

- Survival Analysis: Cox’s Regression, Weibull Distribution, Parametric Survival Models

- Ensemble Models: Bagging, Boosting, Stacking, Random Forests, XGBoost, GBDT

- Neural Networks and Introduction to Deep Learning: Multilayer Perceptron, Backpropagation, Convolutional Neural Networks, Encoder-Decoders, Recurrent Neural Networks, Long Short-Term Memory (LSTM)

- Time Series Forecasting: Time Series Decomposition, Holt-Winters, ARIMA, Intermittent Models, Time-frequency domain, Fourier transforms, wavelet transforms, Dynamic Regression Models, Neural Networks, Demand Forecasting

- Case Study: Network Intrusion detection

- Case Study: Weather Forecasting

- Case Study: Image Classification

- Case Study: Predictive Text Generation

- Case Study: Customer Lifetime Modelling

- Case Study: Churn Prediction

- Case Study: Speech synthesis

Course V: Kaggle Competitions (what happens at Kaggle is seen by the whole world)

- Preprocess the data and generate new features from various sources such as text and images.

- Advanced feature engineering techniques: generating mean encodings, aggregated statistical measures, and nearest-neighbor features as a means to improve your predictions.

- Cross-validation methodologies to benchmark your solutions.

- Analyzing and interpreting the data: inconsistencies, high noise levels, errors and other data-related issues such as leakage.

- Efficiently tune the hyperparameters of algorithms and achieve top performance.

- Master the art of combining different machine learning models and learn how to ensemble.

- Get exposed to past (winning) solutions and codes and learn how to read them.

Course VI: Unsupervised Machine Learning

- Clustering Methods: Expectation Maximization, K-means, K-medoids, Agglomerative and Divisive Hierarchical Clustering, BIRCH, DBSCAN, Spectral Clustering, Self-Organizing Maps

- Community detection in graphs

- Association Rules and Sequence Pattern Discovery

- Deviation Detection

- Semi-supervised and Active Learning

- Sequential Data Models: Markov Models and Hidden Markov Models, Kalman Filters

- Model Selection: Model Comparisons, Analysis Considerations

- Case Study: Viral Marketing

- Case Study: Driving for Fuel Efficiency

Course VII: Bayesian Machine Learning

- Expectation Maximization algorithm

- Probit regression

- Expectation Maximization to variational inference

- Variational inference

- Finding optimal distributions

- Exponential families

- Conjugate exponential family models

- Scalable inference

- Bayesian nonparametric clustering

- Markov Models, Hidden Markov models

- Conditional Random Fields

- Monte Carlo, Sampling, Rejection Sampling

- Poisson matrix factorization

- Decision Networks

- Bayesian Optimization

- Bayesian State-Space Models and Kalman Filtering

- Probabilistic Numerics and Bayesian Quadrature

- Case Study: Named Entity Extraction

- Case Study: Car navigation

- Case Study: Diagnosis

Advanced Machine Learning Program

Preparatory Course I: Foundations of Scalable Data Science

- The MapReduce paradigm

- Hadoop: HDFS, Hive (SQL), Pig, Sqoop, Flume, Avro

- NoSQL: Bigtable, HBase, Document stores, Graph stores, Key-Value stores

- Spark and Introduction to PySpark

2. Functional Programming in Scala

Functions, Data and Abstractions, Collections, Pattern Matching and Functions, Lazy Evaluation, Functions and State, Observer Pattern, Reactive Programming, Parallel Programming, Data Parallelism, Data Structures for Parallel Computing, RDDs, Pair RDDs, Reduction Operations, Partitioning and Shuffling, DataFrames, Spark SQL

3. Design Thinking and Storytelling

Preparing your mind for innovation, Idea Generation and Experimentation

Preparatory Course II: Statistics of NLP

- Statistical Language Modeling

- Computational Linguistics

- Statistical Decision Making and the Source-Channel Paradigm

- Sparseness; Smoothing

- Measuring Success: Information Theory, Entropy and Perplexity

- Maximum Entropy Models, Whole-Sentence Models, Semantic Modeling

- EM for sound separation

- Probabilistic Context Free Grammars (PCFG), the Inside-Outside Algorithm

- Syntactic Language Models

- Decision Tree Language Models

Course I: Scalable Machine Learning

- Multitask Learning

- Asynchronous Gradient Descent: Hogwild!, Momentum, Gibbs Sampling, Cyclades

- Hyperparameter Optimization

- Low-Precision Training

- Matrix Completion and Approximation

- Tensor Factorization

- On-device Inference

- Performance Optimizers

- Local Learning

- Feature Selection

- Reinforcement Learning Optimization

- Distributed Reinforcement Learning

- Scalable Variational Inference

- Interpretable ML

- ADMM and its connections to belief propagation

- The nonconvex landscape of neural networks

Course II: Data Streams

- Data Streams Computational Model

- Change Detection

- Classification

- Regression

- Clustering Data Streams

- Frequent Pattern Mining

- Case Study: IoT Data Processing

Course III: Natural Language Processing

1. Deep Neural Networks for Natural Language Processing (8 Hours)

A. Introduction

- Introduction to Neural Networks

- Example Tasks and Their Difficulties

- What Neural Nets Can Do To Help

B. Predicting the Next Word in a Sentence

- Computational Graphs

- Feed-forward Neural Network Language Models

- Measuring Model Performance: Likelihood and Perplexity

C. Distributional Semantics and Word Vectors

- Describing a word by the company that it keeps

- Counting and predicting

- Skip-grams and CBOW

- Evaluating/Visualizing Word Vectors

- Advanced Methods for Word Vectors

D. Why is word2vec So Fast?: Speed Tricks for Neural Nets

- Softmax Approximations: Negative Sampling, Hierarchical Softmax

- Parallel Training

- Tips for Training on GPUs

E. Convolutional Networks for Text

- Bag of Words, Bag of n-grams, and Convolution

- Applications of Convolution: Context Windows and Sentence Modeling

- Stacked and Dilated Convolutions

- Structured Convolution

- Convolutional Models of Sentence Pairs

- Visualization for CNNs

F. Recurrent Networks for Sentence or Language Modeling

- Recurrent Networks

- Vanishing Gradient and LSTMs

- Strengths and Weaknesses of Recurrence in Sentence Modeling

- Pre-training for RNNs

G. Using/Evaluating Sentence Representations

- Sentence Similarity

- Textual Entailment

- Paraphrase Identification

- Retrieval

H. Conditioned Generation

- Encoder-Decoder Models

- Conditional Generation and Search

- Ensembling

- Evaluation

- Types of Data to Condition On

I. Attention

- Attention

- What do We Attend To?

- Improvements to Attention

- Specialized Attention Varieties

- A Case Study: "Attention is All You Need"

2. Foundations of Natural Language Processing

- Word embedding

- Named entity recognition

- Parts-of-Speech tagging

- Language modeling

- Segmentation

- Paraphrasing

- Machine translation

- Information Extraction

- Text Summarization

- Conditional Random Fields

- Dimensionality Reduction: Matrix Factorization, Topic Models

- Case Study: Q & A Systems

- Case Study: Spam Detector

3. Text Classification

- Tokenization

- Lemmatization

- Vectorization

- Bag of Words representation

- Language Models

- TF-IDF

- Singular Value Decomposition

- Topic Models

- Discourse Modelling

- Coreference Resolution

- Question Answering Systems

- Visualizing complex and high dimensional data

- Sentiment Analysis

- Case Study: Web Personalization

- Case Study: Text Classification

Course IV: Recommender Systems

- Content Based Filtering

- User and Item based Collaborative Filtering

- New Item Problem

- ALS

- Conversational Recommenders

- Diversity in Recommendation

Course V: Analysis of Large Data Sets

- Frequent Itemset Mining

- Locality-Sensitive Hashing

- Dimensionality Reduction

- Algorithms on Large Graphs

- Large-Scale Machine Learning

- Computational Advertising

- Learning through Experimentation

- Optimizing Submodular Functions

Course VI: Social Network Analysis

- Introduction and Structure of Graphs

- Web as a Graph and the Random Graph Model

- The Small-World Phenomenon

- Decentralized search in small-world and P2P networks

- Applications of Social Network Analysis

- Networks with Signed Edges

- Cascading Behavior: Decision Based Models of Cascades

- Cascading Behavior: Probabilistic Models of Information Flow

- Influence Maximization

- Outbreak Detection

- Power-laws and Preferential attachment

- Models of evolving networks

- Kronecker graphs

- Link Analysis: HITS and PageRank

- Strength of weak ties and Community structure in networks

- Network community detection: Spectral Clustering

- Biological networks, Overlapping communities in networks

- Representation Learning on Graphs

Our Address:

2/3, 2nd Floor, 80 Feet Road, Barleyz Junction, Sony World Crossing, Above KFC, Koramangala, Venkappa Garden, Ejipura, Bengaluru, Karnataka 560034